Sussex-Huawei Locomotion Challenge 2025

The Sussex-Huawei Locomotion Dataset [1-2] will be used in an activity recognition challenge with results to be presented at the HASCA Workshop at Ubicomp 2025.

This seventh edition of the challenge follows on our very successful 2018, 2019, 2020, 2021, 2023 and 2024 challenges, which saw the participation of more than 130 teams and 300 researchers [4, 6, 7].

Motivated by the growing interest in foundation models, particularly large language models, this year’s edition will explore their application to transportation mode recognition. The goal is to accurately recognize eight modes of locomotion and transportation (activities) in a user-independent manner. Participants will be required to develop a solution that leverages a recognized and well-established foundation model, such as time series models like TimesNet, Chronos, or even models from other domains including language (GPT, BERT) and vision (Flamingo), among others. The provided dataset includes training, validation, and testing sets. Each submission should implement an algorithmic pipeline that utilizes foundation models to process sensor data, build predictive models, and output the recognized activities.

This year we will host two tasks. Please read the instructions for each task on this page carefully, as the tasks have different evaluations and rules.

Participants are free to take part in either one or both tasks.

Deadlines

- Registration via email: as soon as possible, but not later than 10.05.2025 (previously 30.04.2025)

- Challenge duration: 20.04.2025 – 26.06.2025 (previously 16.06.2025)

- Submission deadline: 26.06.2025 (previously 16.06.2025)

- HASCA-SHL paper submission: 30.06.2025 (previously 20.06.2025)

- HASCA-SHL review notification: 14.07.2025 (previously 30.06.2025)

- HASCA-SHL camera ready submission: 21.07.2025

- UbiComp early-bird registration: TBD

- HASCA workshop: 12-13th October (TBC), 2025 in Espoo, Finland.

- Release of the ground-truth of the test data: TBD

Registration

Each team should send a registration email to shldataset.challenge@gmail.com as soon as possible, but not later than 30.04.2025, stating:

- The name of the team

- The names of the participants in the team

- The organization/company (individuals are also encouraged)

- The contact person with email address

HASCA Workshop

To be part of the final ranking, participants will be required to submit a detailed paper to the HASCA workshop. The paper should contain a technical description of the processing pipeline, the algorithms, and the results achieved during the development/training phase. Submissions must follow the HASCA format, with a length of between 3 and 6 pages.

Task 1

Task 1 focuses on the in-domain setting, where the training and testing data are both from the SHL dataset. The goal is to accurately recognize eight modes of locomotion and transportation (activities) in a user-independent manner. Participants will be required to develop a solution that leverages a recognized and well-established foundation model, such as time series models like TimesNet or Chronos, or even models from other domains including language (GPT, BERT) and vision (Flamingo), among others. The provided dataset includes training, validation, and testing sets. Each submission should implement an algorithmic pipeline that utilizes foundation models to process sensor data, build predictive models, and output the recognized activities.

Prizes

- 800 £

- 400 £

- 200 £

Dataset and format

The data is divided into three parts: train, validation and test. It comprises 59 days of training data, 6 days of validation data and 28 days of test data. All three parts were generated by segmenting the full recordings with a non-overlapping sliding window of 5 seconds.
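The segmentation described above can be illustrated with a minimal sketch. It assumes the 100 Hz sampling rate given in the file description of this section, so a 5-second window holds 500 samples. The function name `segment` and the choice to drop an incomplete trailing window are assumptions made for illustration, not the organizers' documented procedure.

```python
from typing import List, Sequence

SAMPLING_RATE_HZ = 100  # stated in the file description
WINDOW_SECONDS = 5      # stated in the dataset description
FRAME_LEN = SAMPLING_RATE_HZ * WINDOW_SECONDS  # 500 samples per frame


def segment(signal: Sequence[float], frame_len: int = FRAME_LEN) -> List[List[float]]:
    """Cut a 1-D signal into consecutive, non-overlapping frames.

    Trailing samples that do not fill a whole frame are dropped
    (an assumption made for this sketch).
    """
    n_frames = len(signal) // frame_len
    return [list(signal[i * frame_len:(i + 1) * frame_len]) for i in range(n_frames)]


# Toy signal: 12.3 seconds at 100 Hz = 1230 samples, i.e. 2 full frames.
toy = [float(i) for i in range(1230)]
frames = segment(toy)
print(len(frames), len(frames[0]))  # prints: 2 500
```

Because the windows do not overlap, each sample belongs to exactly one frame, which is why one line in a data file corresponds to one 5-second frame.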

The train data contains the raw sensor data from one user (user 1) and four phone locations (bag, hips, torso, hand). It also includes the activity labels (class labels). The train data contains four sub-directories (Bag, Hips, Torso, Hand) with the following files in each sub-directory:

- Acc_*.txt (with * being x, y, or z): acceleration

- Gyr_*.txt: rate of turn

- Magn_*.txt: magnetic field

- Label.txt: activity classes.

Each file contains 196072 lines x 500 columns, corresponding to 196072 frames of 500 samples each (5 seconds at a sampling rate of 100 Hz). The frames of the train data are consecutive in time.

The validation data contains the raw sensor data from the other two users (a mix of user 2 and user 3) and four phone locations (bag, hips, torso, hand), with the same files as the train data. Each file in the validation data contains 28789 lines x 500 columns, corresponding to 28789 frames of 500 samples each (5 seconds at a sampling rate of 100 Hz). In each data frame, one or more sensor modalities are randomly missing, which is represented by setting the corresponding sensor data to zero. The validation data is extracted from the already released preview of the SHL dataset.
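As a rough illustration of the file layout and of the zeroed modalities described above, the following sketch reads per-axis files and flags frames in which a modality is entirely zero. It assumes that samples on a line are separated by whitespace, which should be checked against the released files. The helper names `read_frames` and `missing_mask` are hypothetical, and the demo uses tiny stand-in files (3 frames x 4 samples) rather than real data.

```python
import os
import tempfile
from typing import Dict, List


def read_frames(path: str) -> List[List[float]]:
    """Read one sensor file: one frame per line, whitespace-separated samples (assumed)."""
    with open(path, "r", encoding="utf-8") as fh:
        return [[float(v) for v in line.split()] for line in fh if line.strip()]


def missing_mask(axes: Dict[str, List[List[float]]]) -> List[bool]:
    """Per frame, True when every sample on every axis is exactly zero."""
    n_frames = len(next(iter(axes.values())))
    return [
        all(v == 0.0 for frames in axes.values() for v in frames[i])
        for i in range(n_frames)
    ]


# Demo on tiny stand-in files instead of the real N x 500 files.
with tempfile.TemporaryDirectory() as root:
    rows = {
        "x": ["1 2 3 4", "0 0 0 0", "5 6 7 8"],
        "y": ["1 1 1 1", "0 0 0 0", "0 0 0 0"],
        "z": ["9 9 9 9", "0 0 0 0", "2 2 2 2"],
    }
    for axis, lines in rows.items():
        with open(os.path.join(root, f"Gyr_{axis}.txt"), "w", encoding="utf-8") as fh:
            fh.write("\n".join(lines) + "\n")
    gyr = {a: read_frames(os.path.join(root, f"Gyr_{a}.txt")) for a in "xyz"}

gyr_missing = missing_mask(gyr)
print(gyr_missing)  # prints: [False, True, False]
```

Only the second frame is flagged, because a modality is treated as missing only when all three of its axes are zero. A single all-zero axis, as in the third frame, is not enough under this assumption.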

The test data contains the raw sensor data from the other two users (a mix of user 2 and user 3) and three phone locations (bag, hips, torso), with the same files as the train data but without class labels. In each data frame, one or more sensor modalities are randomly missing, which is represented by setting the corresponding sensor data to zero. This is the data on which predictions must be made. Each file contains 92726 lines x 500 columns (5 seconds at a sampling rate of 100 Hz). To challenge the real-time performance of the classification, the frames are shuffled, i.e. two successive frames in a file are likely not consecutive in time.

Downloads

Data to be released on 20.04.2025.

Download train data

- Training set – Bag (3.0 GB)

- Training set – Hand (3.2 GB)

- Training set – Hips (3.1 GB)

- Training set – Torso (3.0 GB)

Download validation data

Download test data

Download example submission

Submission of predictions on the test dataset

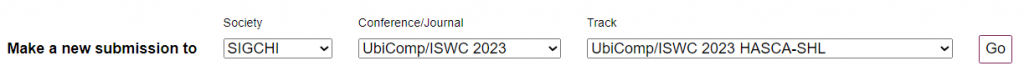

The participants should submit a plain text prediction file (e.g. “teamName_predictions.txt), containing the a matrix of size 92726 lines x 500 columns corresponding to each sample in the testing dataset. An example of submission is available. The participants’ predictions should be submitted online by sending an email to shldataset.challenge@gmail.com, in which there should be a link to the predictions file, using services such as Dropbox, Google Drive, etc. In case the participants cannot provide links using some file sharing service, they should contact the organizers via email shldataset.challenge@gmail.com, which will provide an alternate way to send the data. To be part of the final ranking, participants will be required to publish a detailed paper in the proceedings of the HASCA workshop. The date for the paper submission is 20.06.2025. All the papers must be formatted using the ACM SIGCHI Master Article template with 2 columns. The template is available at TEMPLATES ISWC/UBICOMP2025. Submissions do not need to be anonymous. Submission is electronic, using precision submission system. The submission site is open at https://new.precisionconference.com/submissions (select SIGCHI / UbiComp 2025 / UbiComp 2025 Workshop – HASCA-SHL and push Go button). See the image below.  A single submission is allowed per team. The same person cannot be in multiple teams, except if that person is a supervisor. The number of supervisors is limited to 3 per team. One supervisor can cover up to 3 teams.

A single submission is allowed per team. The same person cannot be in multiple teams, except if that person is a supervisor. The number of supervisors is limited to 3 per team. One supervisor can cover up to 3 teams.
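The prediction file format described in this section can be sketched as follows. The sketch assumes integer class codes, space-separated columns, and a pipeline that predicts one label per frame and repeats it across the 500 samples of that frame. None of these details are specified here, so the example submission should be treated as the authoritative format. The helper names `write_predictions` and `check_shape` are hypothetical.

```python
import os
import tempfile
from typing import Sequence

N_TEST_FRAMES = 92726  # from the task description
N_SAMPLES = 500        # from the task description


def write_predictions(path: str, frame_labels: Sequence[int],
                      n_samples: int = N_SAMPLES) -> None:
    """Write one line per frame, repeating the frame's label for every sample."""
    with open(path, "w", encoding="utf-8") as fh:
        for label in frame_labels:
            fh.write(" ".join([str(int(label))] * n_samples) + "\n")


def check_shape(path: str, n_frames: int, n_samples: int = N_SAMPLES) -> bool:
    """Return True if the file has n_frames lines with n_samples columns each."""
    with open(path, "r", encoding="utf-8") as fh:
        widths = [len(line.split()) for line in fh]
    return len(widths) == n_frames and all(w == n_samples for w in widths)


# Demo with 4 frames; a real submission would pass N_TEST_FRAMES labels.
with tempfile.TemporaryDirectory() as root:
    out = os.path.join(root, "teamName_predictions.txt")
    write_predictions(out, [1, 5, 5, 8])
    ok = check_shape(out, n_frames=4)
    too_short = check_shape(out, n_frames=N_TEST_FRAMES)

print(ok, too_short)  # prints: True False
```

Running a shape check such as `check_shape(path, N_TEST_FRAMES)` before emailing the link is a cheap way to catch a truncated or misaligned file.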

Task 2

Task 2 will focus on cross-domain settings, where we will use our newly acquired activity recognition dataset as the testing dataset. Unlike Task 1, participants for Task 2 are allowed to use multiple open datasets of their choice other than SHL datasets, together with LLMs such as GPT4, Gemini, Claude, and so on. Additionally, Task 2 will permit participants to use both labeled and unlabeled data of their choice.

The details of Task 2 are available on this page

Rules

Some of the main rules are listed below. These rules apply to both tasks, unless otherwise specified in each task’s section. The detailed rules are contained in the following document.

- Eligibility

  - You do not work in or collaborate with the SHL project (http://www.shl-dataset.org/);

  - If you submit an entry but are not qualified to enter the contest, the entry is voluntary. The organizers reserve the right to evaluate it for scientific purposes, but under no circumstances will such entries qualify for sponsored prizes.

- Entry

  - Registration (see above): as soon as possible but not later than 30.04.2025.

  - Challenge: Participants will submit prediction results on test data.

  - Workshop paper: To be part of the final ranking, participants will be required to publish a detailed paper in the proceedings of the HASCA workshop (http://hasca2023.hasc.jp/). The dates will be set during the competition.

  - Submission: The participants’ predictions should be submitted by sending an email to shldataset.challenge@gmail.com containing a link to the predictions file, hosted on a service such as Dropbox or Google Drive. Participants who cannot provide a link through a file sharing service should contact the organizers at shldataset.challenge@gmail.com, who will provide an alternative way to send the data.

  - A single submission is allowed per team. The same person cannot be in multiple teams, except if that person is a supervisor. The number of supervisors is limited to 3 per team.

- Evaluation

  - The challenge this year will explore the application of foundation models to transportation mode recognition. While performance will be evaluated using the testing data, the ranking of a submission will be based mainly on the novelty of the method described in the workshop paper, provided that its recognition performance is above the average of all submissions.

Q&A

Contact

All inquiries should be directed to: shldataset.challenge@gmail.com

Organizers

- Dr. Lin Wang, Queen Mary University of London (UK)

- Prof. Daniel Roggen, University of Sussex (UK)

- Dr. Mathias Ciliberto, University of Cambridge (UK)

- Prof. Hristijan Gjoreski, Ss. Cyril and Methodius University (MK)

- Dr. Kazuya Murao, Ritsumeikan University (JP)

- Dr. Tsuyoshi Okita, Kyushu Institute of Technology (JP)

- Dr. Paula Lago, Concordia University in Montreal (CA)

References

[1] H. Gjoreski, M. Ciliberto, L. Wang, F.J.O. Morales, S. Mekki, S. Valentin, and D. Roggen, “The University of Sussex-Huawei locomotion and transportation dataset for multimodal analytics with mobile devices,” IEEE Access 6 (2018): 42592-42604. [DATASET INTRODUCTION]

[2] L. Wang, H. Gjoreski, M. Ciliberto, S. Mekki, S. Valentin, and D. Roggen, “Enabling reproducible research in sensor-based transportation mode recognition with the Sussex-Huawei dataset,” IEEE Access 7 (2019): 10870-10891. [DATASET ANALYSIS]

[3] L. Wang, H. Gjoreski, M. Ciliberto, S. Mekki, S. Valentin, and D. Roggen, “Benchmarking the SHL recognition challenge with classical and deep-learning pipelines,” in Proceedings of the 2018 ACM International Joint Conference and 2018 International Symposium on Pervasive and Ubiquitous Computing and Wearable Computers, pp. 1626-1635, 2018. [BASELINE FOR MOTION SENSORS]

[4] L. Wang, H. Gjoreski, M. Ciliberto, P. Lago, K. Murao, T. Okita, and D. Roggen, “Three-Year review of the 2018–2020 SHL challenge on transportation and locomotion mode recognition from mobile sensors,” Frontiers in Computer Science, 3(713719): 1-24, Sep. 2021. [SHL 2018-2020 SUMMARY][Motion]

[5] L. Wang, M. Ciliberto, H. Gjoreski, P. Lago, K. Murao, T. Okita, and D. Roggen, “Locomotion and transportation mode recognition from GPS and radio signals: Summary of SHL challenge 2021,” Adjunct Proc. 2021 ACM International Joint Conference on Pervasive and Ubiquitous Computing and Proc. 2021 ACM International Symposium on Wearable Computers (UbiComp’ 21), 412-422, Virtual Event, 2021. [SHL 2021 SUMMARY][GPS+Radio]

[6] L. Wang, M. Ciliberto, H. Gjoreski, P. Lago, K. Murao, T. Okita, and D. Roggen, “Summary of SHL challenge 2023: Recognizing locomotion and transportation mode from GPS and motion sensors,” Adjunct Proc. 2023 ACM International Joint Conference on Pervasive and Ubiquitous Computing and Proc. 2023 ACM International Symposium on Wearable Computers (UbiComp’ 23), 575-585, Mexico, 2023. [SHL 2023 SUMMARY][Motion+GPS]

[7] L. Wang, M. Ciliberto, H. Gjoreski, P. Lago, K. Murao, T. Okita, and D. Roggen, “Summary of SHL challenge 2024: Motion sensor-based locomotion and transportation mode recognition in missing data scenarios,” UbiComp ’24: Companion of the 2024 on ACM International Joint Conference on Pervasive and Ubiquitous Computing, 555-562, Melbourne, Australia, Oct. 2024. [SHL 2024 SUMMARY][Motion]