Sussex-Huawei Locomotion Challenge 2020

The Sussex-Huawei Locomotion Dataset [1-2] will be used in an activity recognition challenge with results to be presented at the HASCA Workshop at UbiComp 2020.

This third edition of the challenge follows on from our very successful 2018 and 2019 challenges, which saw the participation of more than 40 teams [3-5].

The goal of this machine learning/data science challenge is to recognize 8 modes of locomotion and transportation (activities) from the inertial sensor data of a smartphone.

This 2020 edition focuses on recognising modes of transportation in a user-independent manner with an unknown phone position. More precisely, the goal is to recognize the user activity from the data of the phone carried by the “test” user (a combination of users 2 and 3). The location of that phone on the “test” user is not specified. Training data is provided for a “train” user, with all four phone positions available. One of these positions is identical to that of the “test” user. A small “validation” dataset is provided, which includes data from the “test” user for all four possible phone locations.

This webpage links to the train, validation and test datasets. In addition, all data released in the past challenges and in the dataset preview can be used for training.

The participants will have to develop an algorithm pipeline that will process the sensor data, create models and output the recognized activities.
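As a rough illustration of the shape of such a pipeline, the sketch below computes two features per 5-second frame (mean and standard deviation of the acceleration magnitude), fits a nearest-centroid classifier, and expands each frame prediction to the 500 per-sample labels used by the label files. It is a minimal sketch under stated assumptions, not an official or recommended method: all function names are hypothetical, the features and classifier are deliberately simple placeholders, and synthetic data stands in for the real sensor files.

```python
import numpy as np

SAMPLES_PER_FRAME = 500  # 5 s at 100 Hz


def frame_features(acc_x, acc_y, acc_z):
    """Mean and std of the acceleration magnitude for each frame.

    Inputs: arrays of shape (n_frames, 500). Output: (n_frames, 2).
    """
    mag = np.sqrt(acc_x**2 + acc_y**2 + acc_z**2)
    return np.column_stack([mag.mean(axis=1), mag.std(axis=1)])


def majority_label(label_rows):
    """Reduce per-sample labels (n_frames, 500) to one label per frame."""
    return np.array([np.bincount(row.astype(int)).argmax() for row in label_rows])


def fit_centroids(features, frame_labels):
    """Hypothetical 'model': the mean feature vector of each class."""
    classes = np.unique(frame_labels)
    centroids = np.stack([features[frame_labels == c].mean(axis=0) for c in classes])
    return classes, centroids


def predict_frames(features, classes, centroids):
    """Assign each frame to the class with the nearest centroid."""
    dists = np.linalg.norm(features[:, None, :] - centroids[None, :, :], axis=2)
    return classes[np.argmin(dists, axis=1)]


def to_sample_labels(frame_pred):
    """Repeat each frame label over its 500 samples."""
    return np.repeat(frame_pred[:, None], SAMPLES_PER_FRAME, axis=1)


# Synthetic stand-in data: class 2 frames have much stronger motion than class 1.
rng = np.random.default_rng(0)
true = np.repeat([1, 2], 20)
scale = np.where(true == 1, 0.1, 2.0)[:, None]
acc = [rng.normal(0.0, 1.0, (true.size, SAMPLES_PER_FRAME)) * scale for _ in range(3)]
labels = np.repeat(true[:, None], SAMPLES_PER_FRAME, axis=1)

feats = frame_features(*acc)
classes, centroids = fit_centroids(feats, majority_label(labels))
pred = to_sample_labels(predict_frames(feats, classes, centroids))
print(pred.shape)  # (40, 500)
```

Replacing the features or the classifier with stronger ones leaves the input and output shapes unchanged, which is what the submission format depends on.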

Please check the newly added Q&A section at the end of this page (last update: 20/05/2020).

Prizes

- £800

- £400

- £200

*Note: Prizes may increase subject to additional sponsors.

Deadlines

- Registration via email: as soon as possible, but not later than 30.04.2020 (extended from 15.04.2020)

- Challenge duration: 05.04.2020 – 15.06.2020

- Submission deadline: 25.06.2020 (extended from 15.06.2020)

- HASCA-SHL paper submission: 29.06.2020 (extended from 19.06.2020)

- HASCA-SHL review notification: 22.07.2020 (extended from 03.07.2020 and 10.07.2020)

- HASCA-SHL camera-ready submission: 29.07.2020 (HARD deadline; extended from 17.07.2020 and 20.07.2020)

- HASCA workshop: 12.09.2020

- Release of the ground truth of the test data: 13.09.2020

Registration

Each team should send a registration email to shldataset.challenge@gmail.com as soon as possible, but not later than 30.04.2020 (extended from 15.04.2020), stating:

- The name of the team

- The names of the participants in the team

- The organization/company (individuals are also encouraged)

- The contact person with email address

HASCA Workshop

To be part of the final ranking, participants will be required to submit a detailed paper to the HASCA workshop. The paper should contain a technical description of the processing pipeline, the algorithms and the results achieved during the development/training phase. The submissions must follow the HASCA format, but must be between 3 and 6 pages long.

Submission of predictions on the test dataset

The participants should submit a plain-text prediction file (e.g. “teamName_predictions.txt”) containing the predicted labels for the sensor data in the test dataset. The file should have the same structure as the label file of the training dataset, i.e. a matrix of 57573 lines x 500 columns, with one line per frame and one column per sample of the test dataset.

The predictions file should be named following the pattern “teamName_predictions.txt”. An example submission is provided in the download section below.
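A minimal sketch of writing and sanity-checking the prediction file with NumPy, assuming the predicted labels are held in an integer array with one row per test frame and one column per sample. The helper names are hypothetical, and the whitespace-separated integer layout is an assumption that should be checked against the example submission.

```python
import numpy as np

N_TEST_FRAMES = 57573    # lines in each test file
SAMPLES_PER_FRAME = 500  # columns: 5 s at 100 Hz


def write_predictions(pred, path, n_frames=N_TEST_FRAMES):
    """Save an (n_frames, 500) label matrix as plain text, one frame per line."""
    pred = np.asarray(pred)
    expected = (n_frames, SAMPLES_PER_FRAME)
    if pred.shape != expected:
        raise ValueError(f"expected shape {expected}, got {pred.shape}")
    # Integer labels separated by single spaces (assumed layout).
    np.savetxt(path, pred.astype(int), fmt="%d", delimiter=" ")


def check_predictions(path, n_frames=N_TEST_FRAMES):
    """Reload the file and confirm it still has the required shape."""
    loaded = np.loadtxt(path, dtype=int, ndmin=2)
    return loaded.shape == (n_frames, SAMPLES_PER_FRAME)
```

For a correctly sized matrix, calling `write_predictions(pred, "teamName_predictions.txt")` and then `check_predictions("teamName_predictions.txt")` should return `True`; a wrongly sized matrix is rejected before anything is written.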

The participants’ predictions should be submitted by email to shldataset.challenge@gmail.com. The email should contain a link to the predictions file, hosted on a file-sharing service such as Dropbox or Google Drive. Participants who cannot provide such a link should contact the organizers at shldataset.challenge@gmail.com, who will provide an alternative way to send the data.

To be part of the final ranking, participants will be required to publish a detailed paper in the proceedings of the HASCA workshop. The paper submission deadline is 29.06.2020. All papers must be formatted using the two-column ACM SIGCHI Master Article template, which is available at TEMPLATES ISWC/UBICOMP2020. Submissions do not need to be anonymous.

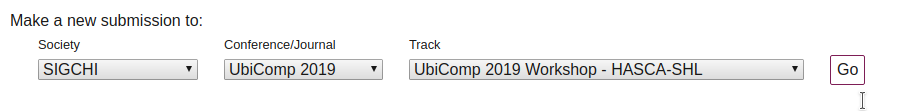

Submission is electronic, using precision submission system. The submission site is open at https://new.precisionconference.com/submissions (select SIGCHI / UbiComp 2020 / UbiComp 2020 Workshop – HASCA-SHL and push Go button). See the image below.

A single submission is allowed per team. The same person cannot be in multiple teams, except if that person is a supervisor. The number of supervisors is limited to 3 per team.

Dataset and format

The data is divided into three parts: train, validation and test. It comprises 59 days of training data, 6 days of validation data and 40 days of test data. All three sets were generated by segmenting the whole data with a non-overlapping sliding window of 5 seconds.
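The segmentation can be sketched as follows, assuming a single continuous 1-D recording sampled at 100 Hz. How partial windows at the end of a recording were handled is not stated, so dropping them here is an assumption, and `segment` is a hypothetical helper.

```python
import numpy as np

FS_HZ = 100                # sampling rate of the dataset
WINDOW_S = 5               # window length in seconds
WINDOW = FS_HZ * WINDOW_S  # 500 samples per frame


def segment(signal, window=WINDOW):
    """Split a 1-D recording into non-overlapping frames of `window` samples.

    Consecutive frames start `window` samples apart (hop == window length),
    so no sample appears in two frames. Trailing samples that do not fill
    a whole frame are dropped.
    """
    signal = np.asarray(signal)
    n_frames = len(signal) // window
    return signal[: n_frames * window].reshape(n_frames, window)


# One hour of data at 100 Hz yields 3600 / 5 = 720 frames.
frames = segment(np.zeros(3600 * FS_HZ))
print(frames.shape)  # (720, 500)
```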

The train data contains the raw sensor data from one user (user 1) and four phone locations (bag, hips, torso, hand). It also includes the activity labels (class label). The train data contains four sub-directories (Bag, Hips, Torso, Hand) with the following files in each sub-directory:

- Acc_*.txt (with * being x, y, or z): acceleration

- Gra_*.txt: gravity

- Gyr_*.txt: rate of turn

- LAcc_*.txt: linear acceleration

- Mag_*.txt: magnetic field

- Ori_*.txt (with * being w, x, y, z): orientation of the device in quaternions

- Pressure.txt: atmospheric pressure

- Label.txt: activity classes.

Each file contains 196072 lines x 500 columns, corresponding to 196072 frames each containing 500 samples (5 seconds at a sampling rate of 100 Hz). The frames for the train data are consecutive in time.
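A sketch of loading one position directory into a single array, assuming each file holds one frame per line with whitespace-separated values and that the file names follow the list above (e.g. `Acc_x.txt`, `Ori_w.txt`, `Pressure.txt`). `MODALITIES` and `load_position` are hypothetical names; the list yields 20 sensor channels, and `Label.txt` is left out because the test data has no labels.

```python
from pathlib import Path

import numpy as np

# The 20 sensor channels listed above, in a fixed order.
MODALITIES = (
    [f"{s}_{a}" for s in ("Acc", "Gra", "Gyr", "LAcc", "Mag") for a in ("x", "y", "z")]
    + [f"Ori_{a}" for a in ("w", "x", "y", "z")]
    + ["Pressure"]
)


def load_position(directory, modalities=MODALITIES, dtype=np.float32):
    """Load all modalities of one position into (n_frames, 500, n_modalities).

    Each <modality>.txt is assumed to contain one frame per line.
    """
    directory = Path(directory)
    arrays = [np.loadtxt(directory / f"{m}.txt", dtype=dtype, ndmin=2) for m in modalities]
    return np.stack(arrays, axis=-1)
```

Memory is the main design constraint: a full training position has 196072 × 500 × 20 values, so the stacked array alone takes about 15.7 GB in float64 and about 7.8 GB in float32 (the default chosen here). Loading only the modalities a model needs reduces this further.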

The validation data contains the raw sensor data from the other two users (a mix of users 2 and 3) and four phone locations (bag, hips, torso, hand), with the same files as the train dataset. Each file in the validation data contains 28789 lines x 500 columns, corresponding to 28789 frames each containing 500 samples (5 seconds at a sampling rate of 100 Hz). The validation data is extracted from the already released preview of the SHL dataset.

The test data contains the raw sensor data from the other two users (a mix of users 2 and 3) and one phone location (unknown to the participants), with the same files as the train dataset but without class labels. This is the data on which the ML predictions must be made. The files contain 57573 lines x 500 columns (5 seconds at a sampling rate of 100 Hz). In order to challenge the real-time performance of the classification, the frames are shuffled, i.e. two successive frames in the file are likely not consecutive in time; furthermore, the jump between the selected frames is larger than the window size, so there are gaps between them in the original recording.

Download train data

- challenge-2019-train_torso (5.45GB) (identical to the file used in the 2019 challenge)

- challenge-2019-train_bag (5.24GB) (identical to the file used in the 2019 challenge)

- challenge-2019-train_hips (5.47GB) (identical to the file used in the 2019 challenge)

- challenge-2020-train_hand (5.62GB) (new for the 2020 challenge)

Download validation data

- challenge-2020-validation (3.03GB)

Download test data

- challenge-2020-test (1.62GB) [15.06.2020: there is a bug in this test data. The test data contains sensor data from 20 modalities (acc_x, acc_y, acc_z, gyr_x, …) and the data frames were permuted randomly. All modalities should have been permuted with the same permutation order. However, in this test data, each modality was permuted with a different random permutation order, which leads to unsynchronized sensor data and thus prevents correct estimation results. We have fixed the bug and updated the test data at the new link below.]

- challenge-2020-test-15062020 (1.62GB) [updated 15.06.2020]
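The fix amounts to drawing one permutation and applying it to every modality, so that row i of each file still describes the same 5-second window. A minimal sketch, assuming the modalities are held in a dict of (n_frames, 500) arrays; the helper names are hypothetical. The inverse step assumes the convention that shuffled frame i came from original frame `perm[i]`; the format of the released permutation file should be checked before relying on it.

```python
import numpy as np


def shuffle_frames(modalities, rng):
    """Shuffle frames with ONE permutation shared by all modalities.

    `modalities` maps a name (e.g. "Acc_x") to an (n_frames, 500) array.
    Returns the shuffled dict and the permutation used.
    """
    n_frames = next(iter(modalities.values())).shape[0]
    perm = rng.permutation(n_frames)
    shuffled = {name: arr[perm] for name, arr in modalities.items()}
    return shuffled, perm


def unshuffle(shuffled, perm):
    """Undo the shuffle: since shuffled[i] == original[perm[i]],
    indexing with the inverse permutation restores the original order."""
    return shuffled[np.argsort(perm)]


# Tag every frame with its original index so synchronization is easy to check.
frame_id = np.arange(6)[:, None] * np.ones((1, 500))
data = {"Acc_x": frame_id, "Gyr_x": frame_id + 100}
shuffled, perm = shuffle_frames(data, np.random.default_rng(1))
print(np.all(shuffled["Gyr_x"] - shuffled["Acc_x"] == 100))  # True: still aligned
```

Drawing a separate `rng.permutation` for each modality, as happened in the faulty release, would break this alignment.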

Download example submission

This is an example of what the submitted classification result should look like: challenge-2020-example_submission.

Ground truth of the test data (released on 13/09/2020)

Download the test labels and the permutations applied to the raw data of the test set (note: the recording days which were used to make up the train, validation and test sets are not yet included).

- challenge-2020-test-labels (394KB)

- challenge-2020-date: days of the complete dataset from which the challenge dataset was created. Check the note.txt within the archive for more information.

Rules

Some of the main rules are listed below. The detailed rules are contained in the following document.

- Eligibility

  - You do not work in or collaborate with the SHL project (http://www.shl-dataset.org/).

  - If you submit an entry but are not qualified to enter the contest, this entry is voluntary. The organizers reserve the right to evaluate it for scientific purposes. If you are not qualified to submit a contest entry and still choose to submit one, under no circumstances will such entries qualify for sponsored prizes.

- Entry

  - Registration (see above): as soon as possible, but not later than 30.04.2020 (extended from 15.04.2020).

  - Challenge: participants will submit prediction results on the test data.

  - Workshop paper: to be part of the final ranking, participants will be required to publish a detailed paper in the proceedings of the HASCA workshop (http://hasca2020.hasc.jp/). See the Deadlines section above for the dates.

  - Submission: the participants’ predictions should be submitted by email to shldataset.challenge@gmail.com. The email should contain a link to the predictions file, hosted on a file-sharing service such as Dropbox or Google Drive. Participants who cannot provide such a link should contact the organizers at shldataset.challenge@gmail.com, who will provide an alternative way to send the data.

  - A single submission is allowed per team. The same person cannot be in multiple teams, except if that person is a supervisor. The number of supervisors is limited to 3 per team.

Q&A

Here is a list of frequently asked questions received by the organizers. The list will be updated during the challenge. Last update: 20/05/2020

- Is the unknown position of the test set the same for User 2 and 3?

Yes, it is. The test set is made by a mixture of data of User 2 and 3, but the phone location is the same for both users. The location is unknown to the participants of the challenge.

Contact

All inquiries should be directed to: shldataset.challenge@gmail.com

Organizers

- Dr. Hristijan Gjoreski, University of Sussex (UK) & Ss. Cyril and Methodius University (MK)

- Dr. Lin Wang, Queen Mary University of London (UK)

- Prof. Daniel Roggen, University of Sussex (UK)

- Dr. Kazuya Murao, Ritsumeikan University (JP)

- Dr. Tsuyoshi Okita, Kyushu Institute of Technology (JP)

- Mathias Ciliberto, University of Sussex (UK)

- Paula Lago, Kyushu Institute of Technology (JP)

References

[1] H. Gjoreski, M. Ciliberto, L. Wang, F.J.O. Morales, S. Mekki, S. Valentin, and D. Roggen, “The University of Sussex-Huawei locomotion and transportation dataset for multimodal analytics with mobile devices,” IEEE Access 6 (2018): 42592-42604. [DATASET INTRODUCTION]

[2] L. Wang, H. Gjoreski, M. Ciliberto, S. Mekki, S. Valentin, and D. Roggen, “Enabling reproducible research in sensor-based transportation mode recognition with the Sussex-Huawei dataset,” IEEE Access 7 (2019): 10870-10891. [MOTION SENSOR DATA ANALYSIS OF THE DATASET]

[3] L. Wang, H. Gjoreski, K. Murao, T. Okita, and D. Roggen, “Summary of the Sussex-Huawei locomotion-transportation recognition challenge,” in Proceedings of the 2018 ACM International Joint Conference and 2018 International Symposium on Pervasive and Ubiquitous Computing and Wearable Computers, pp. 1521-1530, 2018. [SHL 2018 SUMMARY]

[4] L. Wang, H. Gjoreski, M. Ciliberto, S. Mekki, S. Valentin, and D. Roggen, “Benchmarking the SHL recognition challenge with classical and deep-learning pipelines,” in Proceedings of the 2018 ACM International Joint Conference and 2018 International Symposium on Pervasive and Ubiquitous Computing and Wearable Computers, pp. 1626-1635, 2018. [SHL 2018 BENCHMARK]

[5] L. Wang, H. Gjoreski, M. Ciliberto, P. Lago, K. Murao, T. Okita, and D. Roggen, “Summary of the Sussex-Huawei locomotion-transportation recognition challenge 2019,” in Proceedings of the 2019 ACM International Joint Conference and 2019 International Symposium on Pervasive and Ubiquitous Computing and Wearable Computers, pp. 849-856, 2019. [SHL 2019 SUMMARY]


Competition Results

Summary paper and poster:

Summary of the Sussex-Huawei Locomotion-Transportation Recognition Challenge 2020

Lin Wang, Hristijan Gjoreski, Mathias Ciliberto, Paula Lago, Kazuya Murao, Tsuyoshi Okita, Daniel Roggen.

[PDF][Poster]

Accepted papers for presentation as posters:

Combining LSTM and CNN for mode of transportation classification from smartphone sensors.

Björn Friedrich, Carolin Lübbe.

[PDF][Poster][Video]

Activity recognition for locomotion and transportation dataset using deep learning.

Chan Naseeb, Bilal Al Saeedi.

[PDF][Poster][Video]

Where are you? Human activity recognition with smartphone sensor data.

Gulustan Dogan, Seref Recep Keskin, Iremnaz Cay, Sinem Sena Ertas, Aysenur Gencdogmus.

[PDF][Poster][Video]

Human activity recognition using multi-input CNN model with FFT spectrograms.

Kei Yaguchi, Chihiro Ito, Wataru Miyazaki, Kazukiyo Ikarigawa, Yuki Morikawa, Ryo Kawasaki, Yusuke Kyokawa, Eisaku Maeda, Masaki Shuzo.

[PDF][Poster][Video]

Smartphone location identification and transport mode recognition using an ensemble of generative adversarial networks.

Lukas Gunthermann.

[PDF][Poster][Video]

A multi-view architecture for the SHL challenge.

Massinissa Hamidi, Aomar Osmani, Pegah Alizadeh.

[PDF]

UPIC: user and position independent classical approach for locomotion and transportation modes recognition.

Md. Sadman Siraj, Omar Shahid, Md. Ahasan Atick Faisal, Farhan Fuad Abir, Md. Atiqur Rahman Ahad, Sozo Inoue, Tahera Hossain.

[PDF][Poster][Video]

Tackling the SHL recognition challenge with phone position detection and nearest neighbour smoothing.

Peter Widhalm, Philipp Merz, Liviu Coconu, Norbert Brändle.

[PDF][Poster][Video]

Ensemble learning for human activity recognition.

Sekiguchi Ryoichi, Abe Kenji, Yokoyama Takumi, Kumano Masayasu.

[PDF][Poster][Video]

Ensemble approach for sensor-based human activity recognition.

Sunidhi Brajesh, Anjan Ragh Kotagal Shivaprakash, Aswathy Mohan, Indraneel Ray.

[PDF][Poster][Video]

Hierarchical Classification Using ML/DL for Sussex-Huawei Locomotion-Transportation (SHL) Recognition Challenge.

Yi-Ting Tseng, Yi-Hao Lin, Hsien-Ting Lin, Fong-Man Ho, Chia-Hung Lin.

[PDF][Poster][Video]

Accepted papers for full presentation:

IndRNN based long-term temporal recognition in the spatial and frequency domain.

Shuai Li, Beidi Zhao, Yanbo Gao.

[PDF][Video]

Tackling the SHL Challenge 2020 with person-specific classifiers and semi-supervised learning.

Stefan Kalabakov, Simon Stankoski, Nina Reščič, Andrejaana Andova, Ivana Kiprijanovska, Vito Janko, Martin Gjoreski, Mitja Luštrek.

[PDF]

DenseNetX and GRU for the Sussex-Huawei locomotion-transportation recognition challenge.

Yida Zhu, Runze Chen and Haiyong Luo.

[PDF][Video]